How to Use WAN 2.2 Animate in ComfyUI for Character Animations

1. Introduction

In this tutorial, you’ll learn how to create realistic character swap animations using WAN 2.2 Animate in ComfyUI. The workflow keeps the original video’s motion and audio and replaces the on-screen subject with the character from a reference image, producing a lip-synced, motion-accurate animation. We’ll focus on the high-VRAM FP8 workflow, which targets smooth, high-quality results. By the end, you’ll be able to turn a video clip into a polished AI character animation for tutorials, social media content, or creative storytelling.

2. System Requirements for WAN 2.2 Animate

Before generating character swap animations, make sure your system meets the hardware and software requirements to run the WAN 2.2 Animate workflow smoothly inside ComfyUI. The workflow is GPU-heavy: we recommend at least an RTX 4090 (24GB VRAM), or a cloud GPU provider like RunPod.

Requirement 1: ComfyUI Installed & Updated

To get started, make sure you have ComfyUI installed locally or via a cloud setup. For Windows users, follow this guide:

👉 How to Install ComfyUI Locally on Windows

After installation, open the Manager tab in ComfyUI and click “Update ComfyUI” to ensure you’re running the latest version. Keeping ComfyUI updated guarantees compatibility with the latest workflows, nodes, and models used by WAN 2.2 Animate.

While WAN 2.2 Animate can run on a local machine, we highly recommend using our Next Diffusion - ComfyUI SageAttention template on RunPod for this workflow. Here’s why:

- Sage Attention & Triton Acceleration: pre-installed and optimized in the RunPod template, greatly improving generation speed and VRAM efficiency.
- Plug-and-Play Setup: no need to manually configure CUDA or PyTorch; everything is ready for WAN 2.2 Animate out-of-the-box.
- Persistent Storage with Network Volume: keeps your models and workflows saved, so you don’t have to re-download or set up nodes each session.

You can have a ready-to-use ComfyUI instance running in just a few minutes using the RunPod template below:

👉 How to Run ComfyUI on RunPod with Network Volume

Requirement 2: Download WAN 2.2 Animate Model Files

The WAN 2.2 Animate character swap workflow relies on a combination of WAN-based animation models, FP8 optimizations, and LoRA refinements to preserve motion and lip-sync while applying a reference character image.

Download each of the following models and place them in their respective ComfyUI directories exactly as listed below:

| File Name | Hugging Face Download Page | File Directory |
|---|---|---|
| Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors | 🤗 Download | ..\ComfyUI\models\diffusion_models |
| Wan2_1_VAE_bf16.safetensors | 🤗 Download | ..\ComfyUI\models\vae |
| clip_vision_h.safetensors | 🤗 Download | ..\ComfyUI\models\clip_vision |
| umt5-xxl-enc-bf16.safetensors | 🤗 Download | ..\ComfyUI\models\text_encoders |
| lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors | 🤗 Download | ..\ComfyUI\models\loras |
| wan2.2_animate_14B_relight_lora_bf16.safetensors | 🤗 Download | ..\ComfyUI\models\loras |
| yolov10m.onnx | 🤗 Download | ..\ComfyUI\models\detection |
| vitpose-l-wholebody.onnx | 🤗 Download | ..\ComfyUI\models\detection |

💡The detection folder does not exist by default in ComfyUI. Make sure to create the ..\ComfyUI\models\detection folder before placing the detection models.
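If you prefer to script the folder setup, here is a minimal sketch that creates any missing model subfolders, including `detection`. The function name `ensure_model_dirs` and the idea of passing in your ComfyUI root path are assumptions made for illustration; they are not part of ComfyUI itself.

```python
from pathlib import Path

# Subfolders used in this tutorial, relative to ComfyUI/models.
MODEL_SUBDIRS = [
    "diffusion_models",
    "vae",
    "clip_vision",
    "text_encoders",
    "loras",
    "detection",  # not present in a default ComfyUI install
]


def ensure_model_dirs(comfyui_root):
    """Create any missing model subfolders and return all of their paths."""
    models_dir = Path(comfyui_root) / "models"
    paths = []
    for name in MODEL_SUBDIRS:
        target = models_dir / name
        # exist_ok=True leaves folders that already exist untouched.
        target.mkdir(parents=True, exist_ok=True)
        paths.append(target)
    return paths
```

Call it with the path to your own install, for example `ensure_model_dirs("ComfyUI")` when running from the folder that contains ComfyUI.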

Once all models are downloaded and placed in the correct folders, ComfyUI will automatically detect them at startup. This ensures that WAN 2.2 Animate’s character swap, motion, and audio-processing nodes load correctly, enabling smooth, high-quality animations with accurate lip-sync and reference image application.

Requirement 3: Verify Folder Structure

Before running the WAN 2.2 Animate character swap workflow, make sure all downloaded model files are placed in the correct ComfyUI subfolders. Your folder structure should look exactly like this:

```text
📁 ComfyUI/
└── 📁 models/
    ├── 📁 diffusion_models/
    │   └── Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors
    ├── 📁 vae/
    │   └── Wan2_1_VAE_bf16.safetensors
    ├── 📁 clip_vision/
    │   └── clip_vision_h.safetensors
    ├── 📁 text_encoders/
    │   └── umt5-xxl-enc-bf16.safetensors
    ├── 📁 loras/
    │   ├── lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors
    │   └── wan2.2_animate_14B_relight_lora_bf16.safetensors
    └── 📁 detection/
        ├── yolov10m.onnx
        └── vitpose-l-wholebody.onnx
```
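To check the layout without clicking through each folder, a small script along these lines can list which of the files above are still missing. This is only a sketch: `find_missing_models` is a hypothetical helper, and it assumes the file names are exactly those given in the download table.

```python
from pathlib import Path

# Expected files per subfolder of ComfyUI/models, taken from the table above.
EXPECTED_FILES = {
    "diffusion_models": ["Wan2_2-Animate-14B_fp8_e4m3fn_scaled_KJ.safetensors"],
    "vae": ["Wan2_1_VAE_bf16.safetensors"],
    "clip_vision": ["clip_vision_h.safetensors"],
    "text_encoders": ["umt5-xxl-enc-bf16.safetensors"],
    "loras": [
        "lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors",
        "wan2.2_animate_14B_relight_lora_bf16.safetensors",
    ],
    "detection": ["yolov10m.onnx", "vitpose-l-wholebody.onnx"],
}


def find_missing_models(comfyui_root):
    """Return expected model files that are not on disk, as 'subdir/filename'."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for subdir, filenames in EXPECTED_FILES.items():
        for filename in filenames:
            if not (models_dir / subdir / filename).is_file():
                missing.append(f"{subdir}/{filename}")
    return missing
```

An empty list from `find_missing_models("ComfyUI")` means every file is where the workflow expects it. The check only looks at names and locations, not at whether a download completed correctly.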

Once everything is installed and organized, you’re ready to load the WAN 2.2 Animate character swap workflow in ComfyUI and start generating smooth, expressive animations.

Thanks to the LightX2V LoRA, sampling takes just four steps while still delivering natural motion and accurate lip-sync. The Relight LoRA adjusts the lighting and color tones on the character so that it blends into the scene.

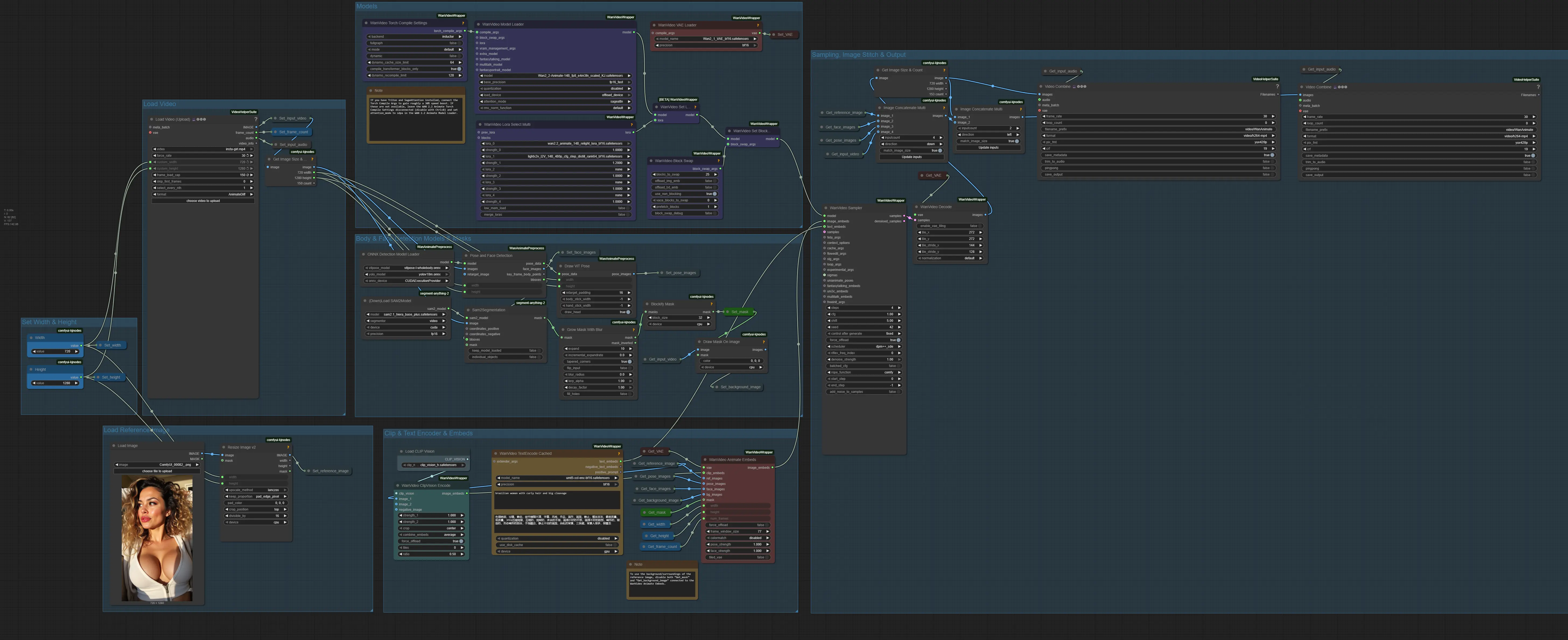

3. Download & Load the WAN 2.2 Animate Character Swap Workflow

Now that your environment and model files are set up, it’s time to load and configure the WAN 2.2 Animate Character Swap Workflow in ComfyUI. This setup ensures all model connections, LoRAs, encoders, and motion/detection nodes work together seamlessly for high-quality character swap animations that preserve the original video’s motion and audio while applying your reference character image. Once configured, you’ll be ready to bring any video clip to life with smooth, expressive animation.

Load the WAN 2.2 Animate Workflow JSON File

👉 Download the WAN 2.2 Animate JSON file and drag it directly into your ComfyUI canvas.

This workflow comes fully pre-arranged with all essential nodes, model references, and detection/audio components required for realistic, frame-accurate character swap animations.

Install Missing Nodes

If any nodes appear in red, it means certain custom nodes are missing.

To fix this:

- Open the Manager tab in ComfyUI.
- Click Install Missing Custom Nodes.
- After installation, restart ComfyUI to apply the changes.

This will ensure all WAN 2.2 Animate, LightX2V LoRA, Relight LoRA, and detection nodes are properly installed and ready to process your video and reference image seamlessly.

Once all nodes load correctly and your workflow opens without errors, you’re ready to upload your source video clip and the reference character image into the designated nodes. WAN 2.2 Animate will automatically preserve the original motion and audio while applying the reference character. The LightX2V LoRA handles the animation in four steps, and the Relight LoRA ensures realistic lighting and color tones, producing a high-fidelity, visually integrated character swap animation.

4. Running the WAN 2.2 Animate Character Swap Workflow

Upload Your Video

Start by loading your input video into the Upload Video node. This video serves as the base for motion, facial, and body tracking.

- For this tutorial, we’ll use a vertical 9:16 video at 720 × 1280 resolution.
- Set force_rate to 30 to match your video’s FPS.
- Set frame_load_cap to 150, giving a 5-second clip at 30 FPS (30 × 5 = 150).

⚡ Pro Tip: Begin with a lower frame_load_cap (the number of frames loaded from the video) to reduce the risk of out-of-memory (OOM) errors. You can increase it later as needed. On an RTX 4090, around 240 frames was the maximum before running into memory limits at this resolution.

| Parameter | Value | Notes |
|---|---|---|
| Width | 720 | Matches vertical portrait video |
| Height | 1280 | Preserves 9:16 aspect ratio |
| FPS (force_rate) | 30 | Matches source video FPS |
| frame_load_cap | 150 | 5-second video; adjust for longer clips |
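The frame_load_cap value is simply clip length multiplied by frame rate. The sketch below shows the arithmetic in both directions; the helper names `frame_load_cap_for` and `clip_seconds` are made up for this example and are not ComfyUI parameters.

```python
def frame_load_cap_for(duration_seconds, fps):
    """Number of frames to load for a clip of the given length."""
    return round(duration_seconds * fps)


def clip_seconds(frame_count, fps):
    """Clip length in seconds for a given number of frames."""
    return frame_count / fps


# The tutorial's settings: 5 seconds at 30 FPS.
print(frame_load_cap_for(5, 30))  # 150

# The roughly 240-frame ceiling reported for an RTX 4090, expressed in seconds.
print(clip_seconds(240, 30))  # 8.0
```

In other words, the 240-frame limit mentioned in the tip corresponds to about 8 seconds of video at 30 FPS and this resolution.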

Upload Your Reference Image

Next, upload the reference image into the Load Image node. Make sure the image uses the same 9:16 aspect ratio as your video.
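If you want to confirm the aspect ratios match before uploading, the comparison can be sketched as below. It works on plain (width, height) pairs that you read from your image and video properties; `aspect_ratio`, `same_aspect_ratio`, and the optional `tolerance` argument are illustrative names, not something the workflow provides.

```python
from fractions import Fraction


def aspect_ratio(width, height):
    """Exact width:height ratio, reduced to lowest terms."""
    return Fraction(width, height)


def same_aspect_ratio(image_size, video_size, tolerance=0):
    """True if two (width, height) pairs share an aspect ratio.

    tolerance is the allowed absolute difference between the two ratios;
    the default of 0 requires an exact match.
    """
    difference = abs(aspect_ratio(*image_size) - aspect_ratio(*video_size))
    return difference <= tolerance


# A 1080 x 1920 reference image matches the 720 x 1280 video (both 9:16).
print(same_aspect_ratio((1080, 1920), (720, 1280)))  # True

# A square image does not.
print(same_aspect_ratio((1024, 1024), (720, 1280)))  # False
```

Using `Fraction` avoids floating-point rounding, so 720 × 1280 and 1080 × 1920 compare as exactly equal.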

### Set Text Prompt

Use the Text Encode node to provide a brief description of the reference character. This helps guide subtle facial expressions and appearance.

Example prompt for this tutorial:

"Brazilian woman with curly hair, big cleavage"

WAN 2.2 Animate Sampler & Video Combine Node

In the WAN 2.2 Animate Sampler node, set steps to 4 because of the LightX2V LoRA workflow. This ensures smooth animation while keeping processing efficient. In the Video Combine node, set the frame_rate to 30 to match your original video.

| Node | Parameter | Value | Notes |
|---|---|---|---|
| WAN 2.2 Animate Sampler | Steps | 4 | Four sampling steps with the LightX2V LoRA |
| Video Combine | frame_rate | 30 | Matches video FPS |
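To see why frame_rate in Video Combine should match force_rate, it helps to compare how long the same frames play at each rate. The following is a rough sketch of that arithmetic, with `rate_mismatch_seconds` as a hypothetical helper name.

```python
def rate_mismatch_seconds(frame_count, force_rate, combine_frame_rate):
    """Difference in seconds between output and source clip length.

    Zero means the rendered clip plays for as long as the loaded
    section of the source video.
    """
    source_seconds = frame_count / force_rate
    output_seconds = frame_count / combine_frame_rate
    return output_seconds - source_seconds


# Matching rates: 150 frames loaded at 30 FPS and written at 30 FPS.
print(rate_mismatch_seconds(150, 30, 30))  # 0.0

# Mismatch: the same 150 frames written at 24 FPS play 1.25 s longer.
print(rate_mismatch_seconds(150, 30, 24))  # 1.25
```

A non-zero result means the video no longer lines up with the original audio track, which would likely show up as drifting lip-sync.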

Run the Generation

Once your video, reference image, text prompt, and sampler settings are configured:

- Run the workflow.
- WAN 2.2 Animate will preserve the original video’s motion and audio while applying the reference character.
- The LightX2V LoRA processes the animation in four steps, and the Relight LoRA ensures realistic lighting and integration into the scene.

The result is a smooth, high-fidelity character swap animation ready for social media, creative projects, or presentations.

⚡ Tip: On an RTX 4090 (24GB VRAM), a 5-second clip at 720 × 1280 takes roughly 450 seconds to generate. For this workflow, I highly recommend using a cloud GPU provider like RunPod.

5. Replacing the Background in WAN 2.2 Animate

In addition to character swapping, WAN 2.2 Animate also allows you to replace the background of the original video while preserving the subject’s pose and audio. This lets you fully transform the scene or place your character into a new environment.

To do this, bypass or disable the following nodes that feed into the WanVideo Animate Embeds node:

- Get_background_image
- Get_mask

You can disable a node by selecting it and pressing Ctrl + B in ComfyUI.
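If you would rather toggle these nodes in the workflow file than in the UI, the idea can be sketched as follows. This rests on several assumptions that you should verify against your own JSON: that the workflow stores its nodes in a top-level `nodes` list, that each of these nodes carries a `title` equal to the names above, and that a `mode` value of 4 means "bypass". The function name `bypass_nodes_by_title` is hypothetical.

```python
# Assumption: node mode 4 is "bypass" in the saved workflow JSON.
BYPASS_MODE = 4


def bypass_nodes_by_title(workflow, titles):
    """Mark every node whose title is in `titles` as bypassed.

    `workflow` is the workflow JSON already loaded into a dict.
    Returns the number of nodes that were changed.
    """
    wanted = set(titles)
    changed = 0
    for node in workflow.get("nodes", []):
        if node.get("title") in wanted:
            node["mode"] = BYPASS_MODE
            changed += 1
    return changed


# Tiny stand-in for a loaded workflow, only to show the call.
example = {
    "nodes": [
        {"id": 1, "title": "Get_background_image", "mode": 0},
        {"id": 2, "title": "Get_mask", "mode": 0},
        {"id": 3, "title": "Some other node", "mode": 0},
    ]
}
print(bypass_nodes_by_title(example, ["Get_background_image", "Get_mask"]))  # 2
```

If the function reports 0 changes on your real workflow, the titles or the JSON layout differ from these assumptions, and pressing Ctrl + B in the UI remains the reliable route.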

Once disabled, the workflow will ignore the original video background and instead use your reference image as the new scene backdrop. This approach works great for creating stylized environments, AI cinematic shots, or custom settings that better fit your animation’s mood or story — all while keeping the original motion and audio perfectly intact. Below are some examples showcasing results with and without background replacement.

6. Conclusion

Congratulations! You’ve successfully learned how to use WAN 2.2 Animate in ComfyUI to perform AI character swap animation with realistic motion, lip-sync, and lighting. Using the LightX2V LoRA for smooth 4-step video generation and the Relight LoRA for natural scene integration, you can now transform any video into high-quality, expressive character animations. Experiment with different reference images and styles to create stunning AI-powered video transformations that stand out across social media and creative projects.